Docker

Winter 2026

EECS 388 uses Docker to simplify setup and maintain a common working environment across the wide variety of machines students use. Projects 1, 2, 3, and 5 distribute development containers with all the tools needed to complete the projects pre-installed, and Projects 3 and 5 also distribute GUI applications that run through Docker.

With Docker, there is no more time spent configuring your environment—simply clone the starter files, press a button in Visual Studio Code, and you’re up and coding.

Introduction

Docker is a container framework: it helps you manage and run multiple containers alongside your host computer. Each container is an isolated environment with its own filesystem, programs, processes, and libraries. In that sense containers are a bit like virtual machines, but because they share the underlying operating system kernel instead of each running a full operating system of their own, they tend to be more efficient. Containers are built from images, which are like snapshots of a computer frozen in time. Images are easy to send around, so the exact same environment can be deployed on many machines, even if the hosts themselves are wildly different.

VS Code has an extension that makes it really easy to use a Docker container as your development environment. Once you download the starter code, all you have to do is press a button, and VS Code will start a container with all the tools you need for the project already installed. The files in your project are instantly synced between your host computer and the container, and any commands you run in VS Code’s terminal run inside the container. Although it is possible to complete this class without VS Code, we recommend it to all students because it makes managing development containers much easier, especially if you don’t have any experience with Docker.

Installing Docker

The first step in using Docker is installing it. Navigate to the Docker Desktop download page and download the installer for the operating system of your current machine (the “host” machine). For macOS users, this is a typical .dmg installer; for Windows users, it is an .exe installer that performs the installation. For Linux users, Docker provides packages for multiple distributions; more information is included in their guides. (In this guide, we assume some Linux knowledge from students who choose to maintain a Linux host, but if you’re having any difficulties, feel free to reach out to us.)

If you are installing Docker on a Linux host, you should install Docker Engine instead of Docker Desktop. Docker Desktop seems to cause permission issues with the volume mounts used in development containers, and Docker Engine still works in a desktop Linux environment.

If you use Windows Subsystem for Linux (WSL), you should follow the instructions for installing Docker Desktop on Windows, not Linux.

After installing Docker, you should be able to run its hello-world image to verify that your installation is working correctly:

$ docker run -it --rm hello-world
Unable to find image 'hello-world:latest' locally
latest: Pulling from library/hello-world
b8dfde127a29: Pull complete
Digest: sha256:9f6ad537c5132bcce57f7a0a20e317228d382c3cd61edae14650eec68b2b345c
Status: Downloaded newer image for hello-world:latest

Hello from Docker!
This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:
 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
    (amd64)
 3. The Docker daemon created a new container from that image which runs the
    executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it
    to your terminal.

To try something more ambitious, you can run an Ubuntu container with:
 $ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:
 https://hub.docker.com/

For more examples and ideas, visit:
 https://docs.docker.com/get-started/

As the note explains, Docker just did a lot behind the scenes! If you see a similar message, congrats—you are fully ready to start on Project 1!
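If you’d like a quick check you can rerun later (for example, when a dev container refuses to start), the shell function below is a minimal sketch, not part of the course materials. `check_docker` is a hypothetical name; the sketch assumes a POSIX shell on your host and relies only on `command -v` and `docker info`, which fails when the Docker daemon isn’t running or, on Linux, when your user lacks permission to talk to it.

```shell
# Hypothetical helper (not part of the starter files): check that the Docker
# client is installed and that it can reach the Docker daemon.
check_docker() {
  # Is a `docker` command available on PATH?
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker: not found on PATH"
    return 1
  fi
  # `docker info` needs a reachable daemon; discard its output, keep its status.
  if ! docker info >/dev/null 2>&1; then
    echo "docker: installed, but the daemon is not reachable"
    return 1
  fi
  echo "docker: OK"
}
```

After sourcing it, `check_docker && docker run --rm hello-world` runs the hello-world image only if both checks pass.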

Running Visual Studio Code in a Container

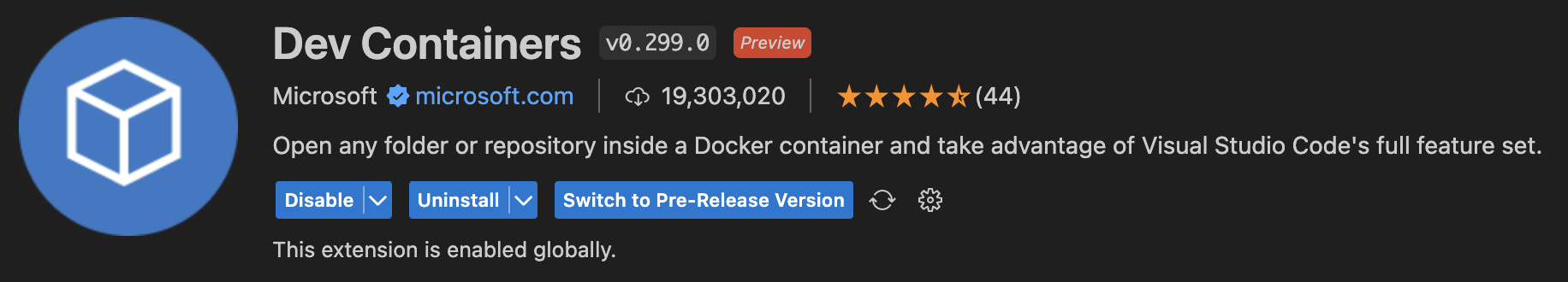

After installing Docker and cloning the Project 1 starter files, search for and install the “Dev Containers” extension in the VS Code marketplace:

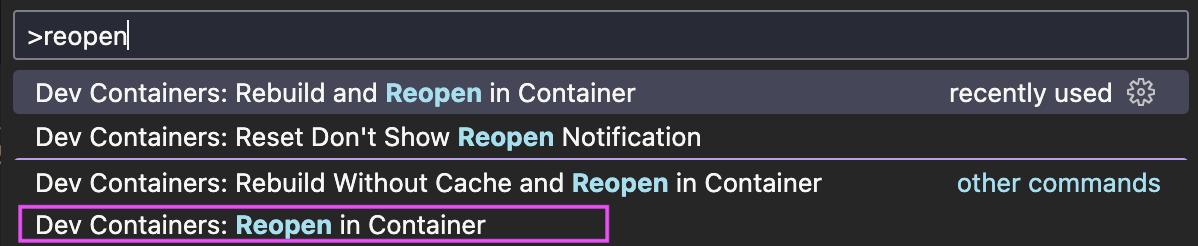

After installing this extension, you may receive a pop-up message to open the current project in a container; if not, open the Command Pallette (CTRL+SHIFT+P on Windows/Linux; ⌘+⇧+P on macOS), then type “reopen in container” and select the command:

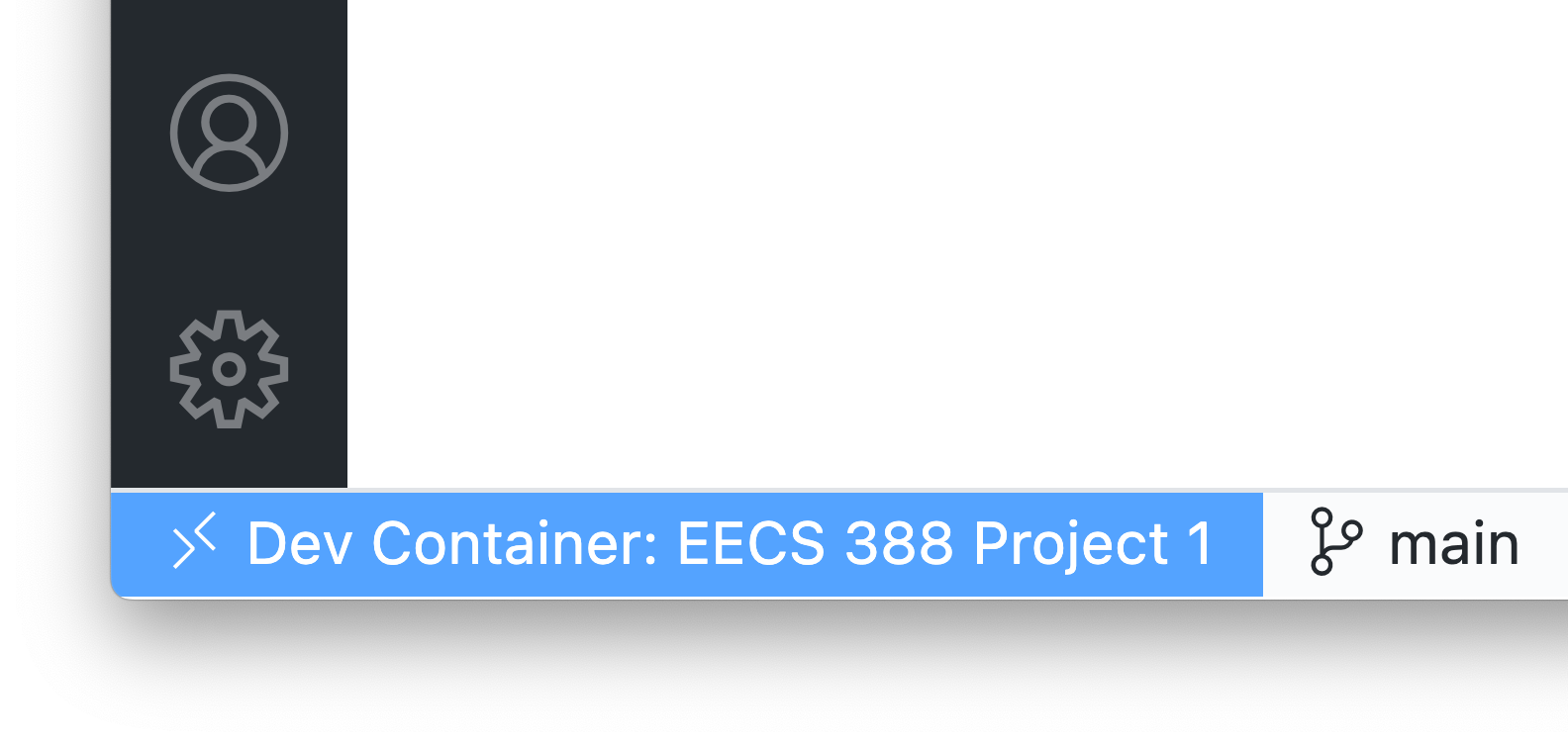

Your VS Code window will close and reopen, and the first time you do this, it may take a few minutes to build the container; its status can be followed in the bottom right of the window. After this finishes, you’ll be greeted with a relatively normal-looking VS Code window, with an exception—looking at the bottom left of the window, you should notice that your environment has changed to “Dev Container”:

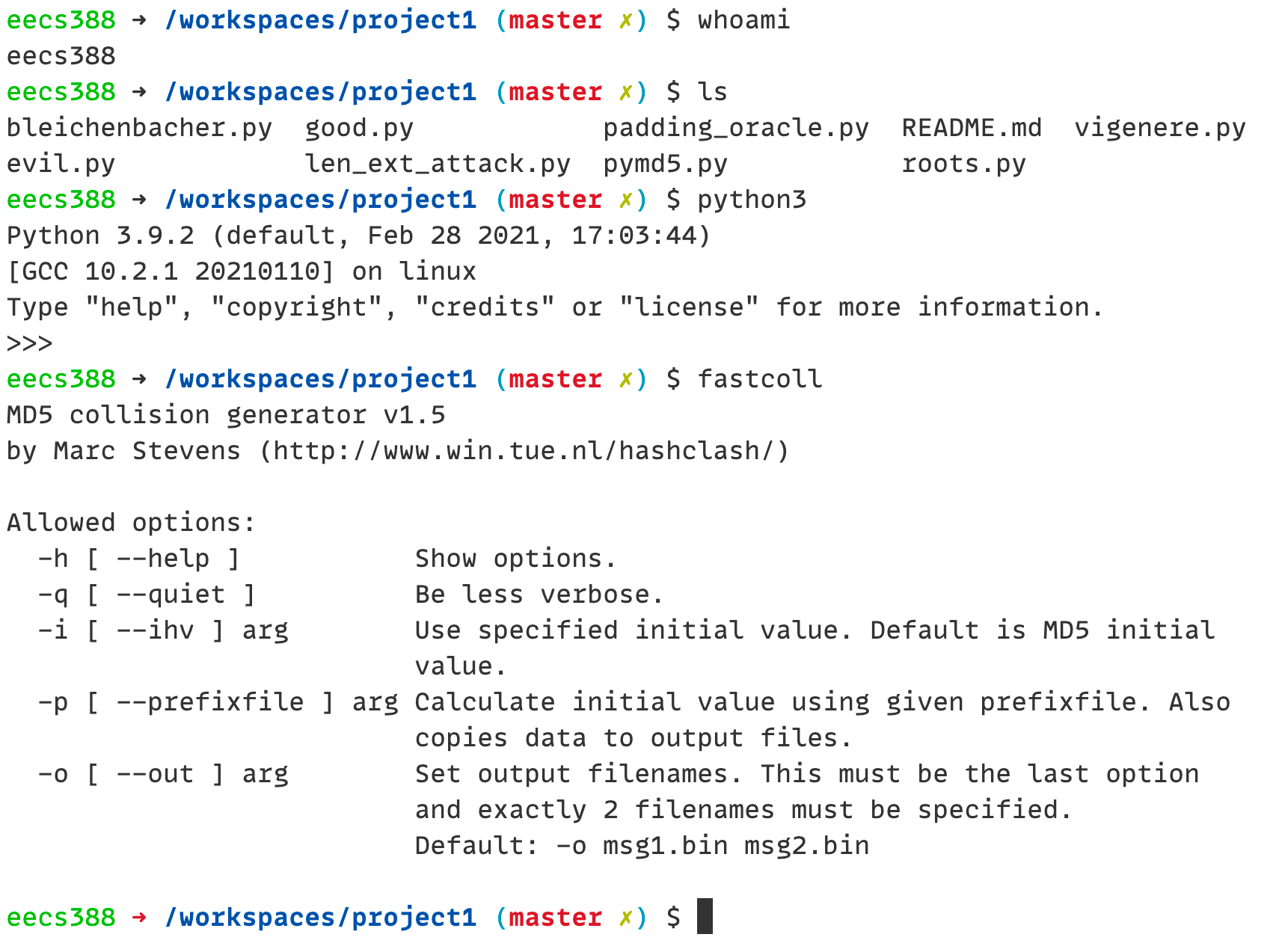

Congrats—that was it; you’re now working within the container! If we open up a terminal in the window, we can further see that we’re in the container:
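To confirm the same thing from a script rather than by eye, one common heuristic is to look for the /.dockerenv marker file, which Docker creates at the root of a container’s filesystem. The function below is a hypothetical sketch built on that heuristic; it is not foolproof (other container runtimes may not create the file), and the optional argument exists only so the check can be pointed at a different path.

```shell
# Hypothetical helper: succeed (exit status 0) if this shell appears to be
# running inside a Docker container. Heuristic only: Docker creates the
# marker file /.dockerenv in containers; other runtimes may not.
in_container() {
  marker="${1:-/.dockerenv}"
  [ -f "$marker" ]
}

# Example usage in the VS Code terminal:
if in_container; then
  echo "inside a container"
else
  echo "on the host"
fi
```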

Any edits you make to the directory within the container will be reflected on your host machine, and you can even commit from within the container! (The Dev Containers extension even copies your .gitconfig file from your host machine, so your commits from within the container keep the same name and email; this is really cool!)

In addition to editing files and using a terminal in the container, VS Code also supports debugging code in the container out of the box. We have provided starter launch.json files for projects where debugging with an IDE makes sense; you might need to fill in a few values to get started, but after that, debugging your code will work just as it may have in EECS 280 or 281.

For future projects, you’ll simply need to perform the “reopen in container” step again.

Customizing the Container

While we have included everything necessary for each project in its container, as well as some niceties like Git, curl, GCC, and jq, we know that there might be some specific tool you use in your development workflow that we’re missing.

Unfortunately, because containers are built from an exact image, any changes you make to the system from within the container may be lost at any time, for example whenever the container is rebuilt.

Luckily, you can still customize the image, and thus the container, further! In each project’s starter files, you’ll find a .devcontainer folder containing a Dockerfile; there, you can see that we use a base image of eecs388/projectN, but also leave room for you to add your own instructions.

You can run commands while the image is being built with the RUN instruction; for example, if we wanted to install Emacs inside the container (are you reading this, Professor DeOrio?), we could add the following line:

RUN sudo apt-get update && sudo apt-get install -y emacs

As you might have guessed from this line, the base image used by all of our development containers is Debian Linux. The update is necessary because, to save space, the image doesn’t keep some ephemeral files; package lists are one such example.

For each project, we will also include guidance on things we do NOT want you to do in the Dockerfile. For example, in Project 1, we note that you should not install any additional Python modules via pip, as you will only have access to the modules we’ve already installed when your code is graded. Customization lets you install some additional niceties in the container, but it does not affect the environment in which your code is graded; keep that in mind.
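Because of this, it can be worth checking that every module your code imports is already available before you rely on it. Here is a minimal sketch, assuming you run it in the project’s container with no Dockerfile additions of your own and that `python3` is on the PATH there: the hypothetical `has_module` function simply tries the import. A successful import in an unmodified container is a good sign, but the staff’s description of the grading environment remains the authority.

```shell
# Hypothetical helper: exit status 0 if `python3` can import the named module.
# Run it in an unmodified project container, not on your host.
has_module() {
  python3 -c "import $1" >/dev/null 2>&1
}

# Example: `json` ships with Python's standard library.
has_module json && echo "json is available"
```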

Reclaiming Resources

Once a project is finished, it can be a good idea to delete its images and containers. It’s not required, but it allows you to reclaim any disk space they were using and ensures they won’t start running in the background when you don’t want them to.

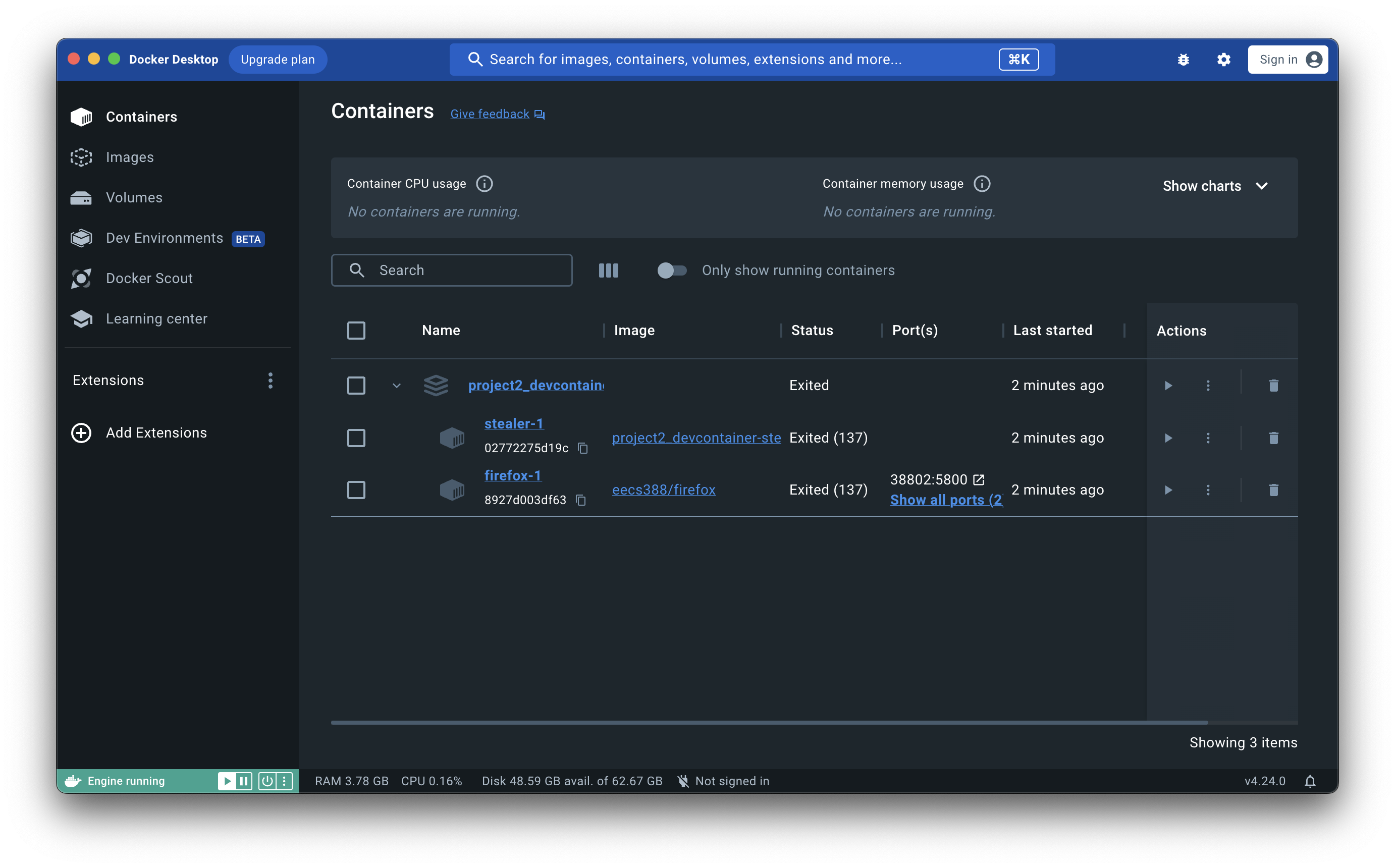

First, delete the containers:

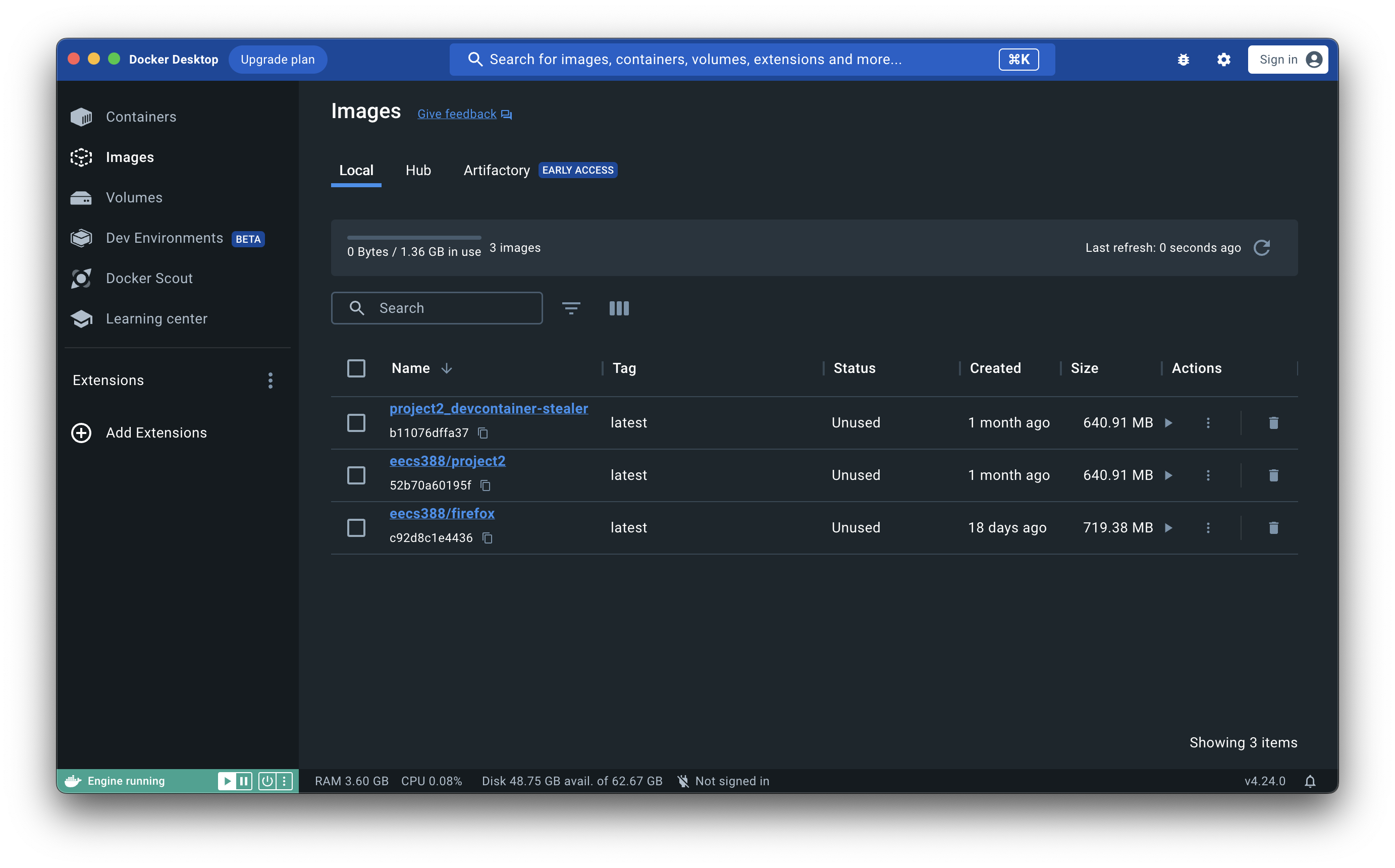

Next, delete the images:

Finally, run the command docker system prune. This removes leftover data that Docker otherwise keeps around, including stopped containers, networks not used by any container, dangling images, and unused build cache, so that none of it lingers in the background.

If you’re not using Docker Desktop, check the Docker CLI reference for how to delete images and containers, then also run the docker system prune command.
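For CLI users, the same cleanup can be sketched as a single shell function. This is a hypothetical helper, not an official course script: it removes every container and image whose image name matches an extended regular expression you pass in, then prunes. The names involved are assumptions you should verify with `docker ps -a` and `docker images` first: the course base images are named like eecs388/projectN, and VS Code typically names the images it builds for dev containers starting with vsc-. Double-check your pattern, since `docker rm -f` also stops running containers.

```shell
# Hypothetical helper (not an official course script): remove every container
# and image whose image name matches the extended regex PATTERN, then prune.
remove_matching() {
  pattern="$1"
  if [ -z "$pattern" ]; then
    echo "usage: remove_matching PATTERN" >&2
    return 2
  fi

  # Containers first (running or stopped); -f stops a running container.
  docker ps -a --format '{{.ID}} {{.Image}}' | grep -E -- "$pattern" |
    while read -r id image; do
      echo "removing container $id ($image)"
      docker rm -f "$id"
    done

  # Then the images themselves.
  docker images --format '{{.ID}} {{.Repository}}' | grep -E -- "$pattern" |
    while read -r id repo; do
      echo "removing image $id ($repo)"
      docker rmi "$id"
    done

  # Clear stopped containers, unused networks, dangling images, and build
  # cache; -f skips the confirmation prompt.
  docker system prune -f
}
```

For example, `remove_matching 'eecs388/project1|vsc-project1'` would target Project 1’s base image plus a dev container image built from a folder named project1 (both names hypothetical; match them to what `docker images` actually lists).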